Why Self-Host Your Anki Sync?

Anki is the gold standard for spaced repetition learning — used by medical students, language learners, and lifelong learners worldwide. By default, Anki syncs through AnkiWeb, Anki’s official cloud service. But there are good reasons to run your own sync server: full ownership of your data, no upload limits, the ability to share a server with a study group, and the peace of mind that comes with keeping everything on your own hardware.

Anki Sync Server Enhanced wraps the official Anki sync binary in a production-ready Docker image with features you’d expect from a proper self-hosted service — and it’s now submitted to the TrueNAS Community App Catalog for one-click deployment.

What’s Included

🔐 User Management: Create sync accounts via environment variables. No database setup required.

🔒 Optional TLS: Built-in Caddy reverse proxy for automatic HTTPS with Let’s Encrypt or custom certs.

💾 Automated Backups: Scheduled backups with configurable retention and S3-compatible storage support.

📊 Metrics & Dashboard: Prometheus-compatible metrics endpoint and optional web dashboard for monitoring.

🐳 Docker Native: Lightweight Debian-based image. Runs as non-root. Healthcheck included.

⚡ TrueNAS Ready: Submitted to the Community App Catalog. Persistent storage, configurable ports, resource limits.

How It Works

Anki Desktop / Mobile → Anki Sync Server Enhanced → TrueNAS Storage

Your Anki clients sync directly to your TrueNAS server over your local network or via Tailscale/WireGuard.

The server runs the official `anki-sync-server` Rust binary (the same code that powers AnkiWeb) inside a hardened container. Point your Anki desktop or mobile app at your server’s URL, and syncing works exactly like it does with AnkiWeb, just on your own infrastructure.

TrueNAS Installation

Once the app is accepted into the Community train, installation is straightforward from the TrueNAS UI. In the meantime, you can deploy it as a Custom App using the Docker image directly.

PR Status: The app has been submitted to the TrueNAS Community App Catalog via PR #4282 and is awaiting review. Track progress on the app request issue #4281.

To deploy as a Custom App right now, use these settings:

Connecting Your Anki Client

After the server is running, configure your Anki client to use it. In Anki Desktop, go to Tools → Preferences → Syncing and set the custom sync URL to your server address, for example `http://your-truenas-ip:8080`. On AnkiDroid, the setting is under Settings → Sync → Custom sync server. On AnkiMobile (iOS), look under Settings → Syncing → Custom Server.

Then simply sync as usual; your Anki client will talk to your self-hosted server instead of AnkiWeb.
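If a client refuses to sync, it can help to first confirm that the server port is reachable at all. The sketch below is only an illustration: `port_is_open` is a hypothetical helper, not part of Anki or the Docker image, and it checks the TCP port rather than fetching a URL because the sync server has no landing page.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return False
```

For example, `port_is_open("your-truenas-ip", 8080)` should return `True` once the container is up, with the placeholder host and port replaced by your own.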

Building It: Lessons from TrueNAS App Development

Packaging a Docker image as a TrueNAS app turned out to involve a few surprises worth sharing for anyone considering contributing to the catalog.

TrueNAS apps use a Jinja2 templating system backed by a Python rendering library, not raw docker-compose files. Your template calls methods like `Render(values)`, `c1.add_port()`, and `c1.healthcheck.set_test()`, which generate a validated compose file at deploy time. This means you get built-in support for permissions init containers, resource limits, and security hardening for free.

One gotcha: TrueNAS runs containers as UID/GID 568 (the `apps` user), not root. If your entrypoint writes to files owned by a different user, it will fail silently or crash. We hit this with a `start_time.txt` write and had to make it non-fatal. Another: the Anki sync server returns a 404 on `/` (it has no landing page), so the default `curl --fail` healthcheck marks the container as unhealthy. Switching to a TCP healthcheck solved it cleanly.

The TrueNAS CI tooling is solid: a single `ci.py` script renders your template, validates the compose output, spins up containers, and checks health status. If the healthcheck fails, it dumps full container logs and inspect data, making debugging fast.

Get Involved

Ready to Self-Host Your Anki Sync?

Deploy it on TrueNAS today or star the project on GitHub to follow development.

Tags: Anki, TrueNAS, Self-Hosted, Docker, Spaced Repetition, Open Source, Homelab

-

AI • Model Release

Claude Opus 4.6: Anthropic’s Most Capable Model Yet

Published February 6, 2026 — 8 min read

On February 5, 2026, Anthropic released Claude Opus 4.6 — its most powerful AI model to date. Arriving just three months after Opus 4.5, this update delivers a massive expansion to the context window, a new “agent teams” paradigm for parallel task execution, and benchmark scores that surpass both OpenAI’s GPT-5.2 and Google’s Gemini 3 Pro across a range of evaluations.

Whether you’re a developer building agentic workflows, a knowledge worker producing professional documents, or a researcher wrestling with enormous datasets, Opus 4.6 marks a tangible leap in what an AI model can handle in a single session.

- 1M: Token context window (beta)

- 128K: Max output tokens

- 68.8%: ARC AGI 2 score

- $5 / $25: Input / output per 1M tokens

What’s New in Opus 4.6

While the version bump from 4.5 to 4.6 may seem incremental, the changes under the hood are substantial. Anthropic has focused on three pillars: reasoning depth, context capacity, and agentic execution.

⚡ 1 Million Token Context Window

A 5× increase over the previous 200K limit. Opus 4.6 scores 76% on the MRCR v2 needle-in-a-haystack benchmark at 1M tokens, compared to just 18.5% for Sonnet 4.5, a qualitative shift in usable context.

🤖 Agent Teams in Claude Code

Multiple specialised agents can now split a task and work in parallel (one on the frontend, one on the API, one on a migration), coordinating autonomously. Anthropic reports a roughly 30% reduction in end-to-end task runtime.

🧠 Adaptive Thinking

Replaces the binary on/off extended thinking toggle. Opus 4.6 dynamically decides how much reasoning effort a prompt requires. Four effort levels (low, medium, high, max) give developers fine-grained cost–speed–quality control.

♾️ Context Compaction API

A new beta feature that automatically summarises older context as conversations grow, enabling effectively infinite sessions without manual truncation or sliding-window hacks.

📊 Claude in PowerPoint & Excel Updates

Claude now operates as a side panel inside PowerPoint, respecting your slide masters and layouts. Excel gets unstructured data support and longer workflows for paid subscribers.

Benchmark Breakdown

Opus 4.6 sets new state-of-the-art scores on several major evaluations. The most striking result is on ARC AGI 2, a benchmark designed to measure novel problem-solving that is easy for humans but notoriously hard for AI. Opus 4.6 scored 68.8% — nearly double Opus 4.5’s 37.6% and well ahead of GPT-5.2 (54.2%) and Gemini 3 Pro (45.1%).

| Benchmark | Opus 4.6 | Opus 4.5 | GPT-5.2 | Gemini 3 Pro |
| --- | --- | --- | --- | --- |
| Terminal Bench 2.0 | 65.4% | 59.8% | - | - |
| OSWorld (Agentic) | 72.7% | 66.3% | < 72.7% | < 72.7% |
| ARC AGI 2 | 68.8% | 37.6% | 54.2% | 45.1% |
| MRCR v2 (1M ctx) | 76% | - | - | - |
| Humanity’s Last Exam | #1 | - | - | - |

Beyond the headline numbers, Opus 4.6 also tops the GDPval-AA benchmark for economically valuable knowledge work, outperforming GPT-5.2 by approximately 144 Elo points. In life sciences, it delivers nearly twice the performance of its predecessor on computational biology, structural biology, organic chemistry, and phylogenetics tests.

Coding and Developer Impact

Coding has always been a strength of the Opus line, and 4.6 takes it further. The model plans more carefully before generating code, catches its own mistakes through improved self-review, and sustains agentic tasks for longer without losing coherence. For large codebases, Anthropic claims it can now handle autonomous code review, debugging, and refactoring across repositories that would have previously required human intervention.

“Opus 4.6 is a model that makes the shift from chatbot to genuine work partner really concrete for our users.” — Scott White, Head of Product, Anthropic

The new agent teams feature in Claude Code is particularly noteworthy. Rather than a single agent working sequentially, developers can now spin up parallel agents that own distinct parts of a task. Anthropic’s example: one agent handles the frontend, another the API layer, and a third manages database migrations — all coordinating autonomously. This is available as a research preview and represents a meaningful step towards multi-agent orchestration out of the box.

Enterprise and Knowledge Work

Anthropic has been explicit about targeting enterprise workflows with this release. Roughly 80% of the company’s business comes from enterprise customers, and Opus 4.6 is tuned for the kind of work they care about: financial analysis, legal research, document production, and multi-step research tasks.

The model now leads on the Finance Agent benchmark and TaxEval by Vals AI. Combined with the expanded context window, analysts can feed entire filings, market reports, and internal data into a single session and get coherent, cross-referenced outputs. Anthropic says Opus 4.6 produces documents, spreadsheets, and presentations that approach expert-created quality on the first pass, reducing the rework cycle significantly.

💡 Pricing Note: Standard API pricing remains at $5 input / $25 output per million tokens, identical to Opus 4.5. Requests exceeding 200K input tokens are charged at a premium rate of $10 / $37.50 per MTok. The model is available via the Anthropic API, AWS Bedrock, Google Vertex AI, Microsoft Foundry, and directly on claude.ai.

Availability and API Changes

Opus 4.6 is live now across all major platforms. The API model identifier is simply `claude-opus-4-6`; note the simplified naming without a date suffix. It’s available on the Anthropic API, AWS Bedrock, Google Vertex AI, Microsoft Foundry, and through GitHub Copilot for Pro, Pro+, Business, and Enterprise users.

Developers should be aware of a few breaking changes: assistant message prefilling now returns a 400 error (migrate to structured outputs or system prompt instructions), the `output_format` parameter has moved to `output_config.format`, and the effort parameter is now generally available without a beta header.

Safety and Alignment

Anthropic reports that the intelligence gains in Opus 4.6 have not come at the cost of safety. On their automated behavioural audit, the model showed low rates of misaligned behaviours including deception, sycophancy, and encouragement of user delusions — matching Opus 4.5’s results. Six new cybersecurity probes have been added to evaluate potential misuse vectors, and the model achieves a lower rate of unnecessary refusals compared to previous releases.

The Bigger Picture

Opus 4.6 arrives at a moment of intensifying competition. OpenAI announced its new OpenAI Frontier enterprise platform just hours before Anthropic’s launch, signalling a strategic pivot towards infrastructure and agent management rather than competing purely on benchmark scores. Google’s Gemini 3 Pro and Microsoft’s deep integration of Opus 4.6 into Foundry add further complexity to the landscape.

What sets this release apart is the combination of raw capability and practical utility. The 1M context window, agent teams, adaptive thinking, and context compaction aren’t just benchmark optimisations — they address real friction points that developers and knowledge workers hit daily. If Opus 4.5 moved Claude from “chatbot” to “useful tool,” Opus 4.6 positions it as a genuine work partner that can own entire workflows end-to-end.

For those already running Opus 4.5 in production, the upgrade path is a single API version change at the same price point. For everyone else, this is a strong argument to take a serious look at what Claude can do in 2026.
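To make the pricing note concrete, here is a rough cost estimator using only the rates quoted in this post. It is a sketch, not an official calculator: `estimate_cost` is a hypothetical helper, and it assumes the premium rate applies to the whole request once input exceeds 200K tokens, which the post does not spell out.

```python
STANDARD = (5.00, 25.00)      # USD per million input / output tokens
PREMIUM = (10.00, 37.50)      # quoted rate for requests over 200K input tokens
PREMIUM_THRESHOLD = 200_000   # input tokens


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request from the quoted per-MTok rates."""
    # Assumption: the premium rate covers the entire request, not just
    # the tokens above the threshold.
    in_rate, out_rate = PREMIUM if input_tokens > PREMIUM_THRESHOLD else STANDARD
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000
```

Under these assumptions, a request with 100,000 input and 10,000 output tokens comes to $0.75, while 500,000 input and 10,000 output tokens comes to $5.375.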

-

Two major releases of rfsrs are now available, bringing custom parameter support, SM-2 migration tools, and — the big one — parameter optimization. You can now train personalized FSRS parameters directly from your Anki review history using R.

Version 0.2.0: Custom Parameters & SM-2 Migration

Critical Bug Fix

Version 0.1.0 had a critical bug: custom parameters were silently ignored. The Scheduler stored your parameters but all Rust calls used the defaults. This is now fixed — your custom parameters actually work.

New Features in 0.2.0

Preview All Rating Outcomes

`fsrs_repeat()` returns all four rating outcomes (Again/Hard/Good/Easy) in a single call, matching the py-fsrs API:

    # See all outcomes at once
    outcomes <- fsrs_repeat(
      stability = 10,
      difficulty = 5,
      elapsed_days = 5,
      desired_retention = 0.9
    )
    outcomes$good$stability   # 15.2
    outcomes$good$interval    # 12 days
    outcomes$again$stability  # 3.1

SM-2 Migration

Migrating from Anki’s default algorithm? `fsrs_from_sm2()` converts your existing ease factors and intervals to FSRS memory states:

    # Convert SM-2 state to FSRS
    state <- fsrs_from_sm2(
      ease_factor = 2.5,
      interval = 30,
      sm2_retention = 0.9
    )
    state$stability   # ~30 days
    state$difficulty  # ~5

Compute State from Review History

`fsrs_memory_state()` replays a sequence of reviews to compute the current memory state:

    # Replay review history
    state <- fsrs_memory_state(
      ratings = c(3, 3, 4, 3),   # Good, Good, Easy, Good
      delta_ts = c(0, 1, 3, 7)   # Days since previous review
    )
    state$stability
    state$difficulty

Vectorized Operations

`fsrs_retrievability_vec()` efficiently calculates recall probability for large datasets:

    # Calculate retrievability for 10,000 cards
    retrievability <- fsrs_retrievability_vec(
      stability = cards$stability,
      elapsed_days = cards$days_since_review
    )

Scheduler Improvements

- `Scheduler$preview_card()`: see all outcomes without modifying the card

- `Card$clone_card()`: deep copy a card for simulations

- `fsrs_simulate()`: convenience function for learning simulations

- State transitions now correctly match py-fsrs/rs-fsrs behavior

Version 0.3.0: Parameter Optimizer

The most requested feature: train your own FSRS parameters from your review history.

Why Optimize?

FSRS uses 21 parameters to predict when you’ll forget a card. The defaults work well for most people, but training custom parameters on your review history can improve scheduling accuracy by 10-30%.

New Functions in 0.3.0

- `fsrs_optimize()`: Train custom parameters from your review history

- `fsrs_evaluate()`: Measure how well parameters predict your memory

- `fsrs_anki_to_reviews()`: Convert Anki’s revlog format for optimization

Optimize Your Parameters

Here’s how to train parameters using your Anki collection:

    library(rfsrs)
    library(ankiR)

    # Get your review history
    revlog <- anki_revlog()

    # Convert to FSRS format
    reviews <- fsrs_anki_to_reviews(revlog, min_reviews = 3)

    # Train your parameters (~1 minute)
    result <- fsrs_optimize(reviews)

    # Your personalized 21 parameters
    print(result$parameters)

    # Use them with the Scheduler
    scheduler <- Scheduler$new(
      parameters = result$parameters,
      desired_retention = 0.9
    )

How It Works

The optimizer uses machine learning (via the burn framework in Rust) to find parameters that best predict your actual recall patterns. It analyzes your review history to learn:

- How quickly you initially learn new cards

- How your memory decays over time

- How different ratings (Again/Hard/Good/Easy) affect retention

I tested it on my own collection with ~116,000 reviews across 5,800 cards — optimization took about 60 seconds.

Compare Parameters

Evaluate how well different parameters predict your memory:

    # Compare default vs optimized
    default_metrics <- fsrs_evaluate(reviews, NULL)
    custom_metrics <- fsrs_evaluate(reviews, result$parameters)

    cat("Default RMSE:", default_metrics$rmse_bins, "\n")
    cat("Custom RMSE:", custom_metrics$rmse_bins, "\n")

Lower RMSE means better predictions.
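For intuition about what a binned RMSE measures, here is a simplified Python sketch. It is not the rfsrs or fsrs-rs implementation: `binned_rmse` is a hypothetical helper, and the equal-width binning on predicted recall is an assumption chosen for clarity. The idea is to group reviews by predicted recall probability, compare the average prediction with the observed recall rate in each group, and weight each group by its size.

```python
from math import sqrt


def binned_rmse(predicted, recalled, n_bins=10):
    """Size-weighted RMSE between mean predicted and observed recall per bin.

    predicted: recall probabilities in [0, 1]
    recalled:  1 if the card was recalled, 0 if it was forgotten
    """
    bins = {}  # bin index -> (count, sum of predictions, sum of outcomes)
    for p, y in zip(predicted, recalled):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the top bin
        count, p_sum, y_sum = bins.get(idx, (0, 0.0, 0.0))
        bins[idx] = (count + 1, p_sum + p, y_sum + y)

    total = sum(count for count, _, _ in bins.values())
    if total == 0:
        raise ValueError("no reviews to evaluate")

    squared_error = sum(
        count * (p_sum / count - y_sum / count) ** 2
        for count, p_sum, y_sum in bins.values()
    )
    return sqrt(squared_error / total)
```

A perfectly calibrated model scores close to zero here: if it predicts 90% recall for a group of reviews and 90% of them are in fact recalled, that group contributes no error.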

Bug Fix

Fixed an issue where cards with only same-day reviews (all delta_t = 0) could cause the optimizer to fail. These are now correctly filtered out.
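The filtering rule is simple enough to sketch in a few lines of Python. This only illustrates the idea and is not the package's code: `drop_same_day_only` and the dictionary layout are assumptions made for the example.

```python
def drop_same_day_only(cards):
    """Keep cards that have at least one review gap longer than zero days.

    cards maps a card id to its list of delta_t values (days since the
    previous review), mirroring the delta_t described above.
    """
    return {
        card_id: delta_ts
        for card_id, delta_ts in cards.items()
        if any(dt > 0 for dt in delta_ts)
    }
```

A card reviewed three times on the same day (`[0, 0, 0]`) is dropped, while one with gaps of `[0, 1, 3]` is kept.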

Installation

    # From r-universe (recommended)
    install.packages("rfsrs", repos = "https://chrislongros.r-universe.dev")

    # Or from GitHub
    remotes::install_github("open-spaced-repetition/r-fsrs")

Note: First build of v0.3.0 takes ~2 minutes due to compiling the ML framework. Subsequent builds are cached.

Full API Summary

| Function | Description | Version |
| --- | --- | --- |
| `fsrs_optimize()` | Train custom parameters | 0.3.0 |
| `fsrs_evaluate()` | Evaluate parameter accuracy | 0.3.0 |
| `fsrs_anki_to_reviews()` | Convert Anki revlog | 0.3.0 |
| `fsrs_repeat()` | All 4 rating outcomes at once | 0.2.0 |
| `fsrs_from_sm2()` | Convert from SM-2/Anki default | 0.2.0 |
| `fsrs_memory_state()` | Compute state from review history | 0.2.0 |
| `fsrs_retrievability_vec()` | Vectorized retrievability | 0.2.0 |
| `Scheduler$preview_card()` | Preview outcomes without modifying | 0.2.0 |
| `Card$clone_card()` | Deep copy a card | 0.2.0 |

Links

Feedback and contributions welcome!

-

Damien Elmes says he’s stepping back from being Anki’s bottleneck—without saying goodbye.

Anki’s creator, Damien Elmes (often known as “dae”), shared a major update about the future of Anki: after nearly two decades of largely solo stewardship, he intends to gradually transition business operations and open-source stewardship to the team behind AnkiHub.

The headline reassurance is clear: Anki is intended to remain open source, and the transition is framed as a way to make development more sustainable, reduce single-person risk, and accelerate improvements—especially long-requested quality-of-life and UI polish.

Why this change is happening

Damien described a familiar pattern for long-running open-source projects: as Anki grew in popularity, demands on his time increased dramatically. Over time, the work shifted away from “deep work” (solving interesting technical problems) toward reactive support, constant interruptions, and the stress of feeling responsible for millions of users.

- Time pressure and stress: Unsustainably long hours began affecting well-being and relationships.

- Delegation limits: Paying prolific contributors helped, but many responsibilities remained hard to delegate.

- Bottleneck risk: Relying on one person puts the entire ecosystem at risk if they become unavailable.

Why AnkiHub?

According to the announcement, AnkiHub approached Damien about closer collaboration to improve Anki’s development pace. Through those conversations, Damien concluded that AnkiHub is better positioned to help Anki “take the next level,” in part because they’ve already built a team and operational capacity.

Crucially, Damien also emphasized that he has historically rejected buyout or investment offers due to fears of “enshittification” and misaligned incentives. This new transition is presented as different: it aims to preserve Anki’s values and open-source nature, while removing the single-person bottleneck.

“This is a step back for me rather than a goodbye — I will still be involved with the project, albeit at a more sustainable level.”

What AnkiHub says they believe

In their reply, AnkiHub emphasized that Anki is “bigger than any one person or organization” and belongs to the community. They echoed the principles associated with Anki’s development: respect for user agency, avoiding manipulative design patterns, and focusing on building genuinely useful tools rather than engagement traps.

Commitments and reassurances

- Open source: Anki’s core code is intended to remain open source.

- No investors: They state there are no outside investors influencing decisions.

- No pricing changes planned: They explicitly say no changes to Anki pricing are planned.

- Not a financial rescue: They say Anki is not in financial trouble; this is about improving capacity and resilience.

- Mobile apps continue: They say mobile apps will remain supported and maintained.

- AnkiDroid remains independent: They state there are no plans/agreements changing AnkiDroid’s self-governance.

What might improve (and why users should care)

If the transition works as intended, users may see benefits in areas that are hard to prioritize under constant time pressure:

- Faster development: More people can work without everything bottlenecking through one person.

- UI/UX polish: Professional design support to make Anki more approachable without losing power.

- Better onboarding: Improved first-run experience and fewer rough edges for beginners.

- Stronger add-on ecosystem: Clearer APIs, better docs, fewer breaking changes, more predictable releases.

- Lower “bus factor”: Reduced risk if any one contributor disappears.

Open questions

AnkiHub also acknowledged that many details are still undecided and invited community input. Areas still being worked out include:

- Governance: How decisions are made, who has final say, and how community feedback is incorporated.

- Roadmap: What gets built when, and how priorities are balanced.

- Transition mechanics: How support scales up without breaking what already works.

FAQ

Will Anki remain open source?

Yes. Both Damien and AnkiHub explicitly frame the transition around keeping Anki’s core open source and aligned with the principles the project has followed for years.

Is this a sale or VC takeover?

The announcement positions this as a stewardship transition, not a typical investor-led acquisition. AnkiHub states there are no outside investors involved.

Are pricing changes coming?

AnkiHub says no pricing changes are planned and emphasizes affordability and accessibility.

What about mobile and AnkiDroid?

They say mobile apps will remain supported. AnkiDroid is described as continuing as an independent, self-governed open-source project.

Bottom line

Damien isn’t leaving—he’s stepping back to a more sustainable role. The goal is to remove a long-standing bottleneck, reduce ecosystem risk, and speed up improvements without compromising what makes Anki special.

If you’ve wanted faster progress, better UI polish, and a more resilient future for Anki—this transition is designed to make that possible, while keeping the project open source and community-oriented.

Published: February 2, 2026 • Category: Announcements • Tags: Anki, Open Source, AnkiHub, Study Tools

-

GitHub has officially rolled out the improved “Files Changed” experience as the default for all users. After months in public preview, this redesigned pull request review interface brings significant improvements to performance, accessibility, and overall productivity when reviewing code changes.

Key Improvements Over the Classic Experience

The new interface maintains familiarity for existing users while adding several notable enhancements:

Comment on Any Line

Previously, you could only comment on lines directly surrounding a change. Now you can add review comments to any line in a changed file, making it easier to provide context or point out related code that might need attention.

View PR Description Without Switching Pages

A new Overview panel lets you view the pull request description directly from the “Files changed” page. No more jumping back and forth between tabs to remember what the PR is supposed to accomplish.

Enhanced File Tree

The file tree sidebar is now resizable and includes visual indicators showing which files have comments, errors, or warnings. This makes it much easier to track your progress when reviewing large PRs with many changed files.

Draft Comments That Persist

Comments and replies are now saved locally in your browser. If you accidentally close the tab or refresh the page, your in-progress feedback won’t be lost.

Fewer Page Reloads

Actions like refreshing to pull in new changes, switching between split and unified diff modes, and other common tasks no longer force a full page reload. The interface feels much snappier as a result.

Improved Accessibility

The new experience includes better keyboard navigation, screen reader landmarks, and increased line spacing options to make code review accessible to everyone.

Experimental Mode for Large Pull Requests

One of the most interesting additions is an experimental mode specifically designed for reviewing large pull requests. This mode uses virtualization to reduce the number of DOM elements the browser needs to manage, significantly improving memory usage and page responsiveness—especially on slower machines.

When viewing a large PR, you’ll see a banner offering to try this experimental mode. There are some trade-offs: browser find functionality, text selection across the entire page, printing, and some browser extensions may not work as expected since the full diff isn’t rendered in the DOM. You can switch back to single file mode at any time.
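The core idea behind virtualization can be sketched in a few lines: only the rows near the viewport are rendered, and everything else is left out of the DOM. The Python below is a generic illustration, not GitHub's implementation. `visible_rows`, the fixed row height, and the `overscan` margin are all assumptions made for the example.

```python
def visible_rows(scroll_top, viewport_height, row_height, total_rows, overscan=5):
    """Return (start, end) row indices worth rendering, end exclusive.

    All sizes are integer pixels; overscan adds a few extra rows above and
    below the viewport so fast scrolling does not reveal blank space.
    """
    first = scroll_top // row_height
    # Ceiling division: index just past the last partially visible row.
    last = -(-(scroll_top + viewport_height) // row_height)
    start = max(0, first - overscan)
    end = min(total_rows, last + overscan)
    return start, end
```

With a 600-pixel viewport and 20-pixel rows, a 10,000-line diff needs only around 40 rendered rows at any scroll position. It also shows why browser find and select-all stop seeing the whole diff: most rows simply are not in the page.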

Bug Fixes and Polish

GitHub has also addressed numerous issues including problems with suggested changes being applied incorrectly, comment workflow bugs, interaction lag (especially on Safari), and various UI quirks like scroll positioning and sticky headers behaving unexpectedly.

Opting Out

If you prefer the classic experience, you can still opt out through your settings. However, given the improvements in this new version, it’s worth giving it a fair trial before switching back.

Providing Feedback

GitHub is actively collecting feedback on the new experience. If you encounter issues or have suggestions, you can participate in the “Files Changed” preview feedback discussion on GitHub.

-

Introducing ankiR Stats: The Only Anki Addon with Time Series Analytics

I’m excited to announce the release of ankiR Stats, a new Anki addon that brings advanced statistical analysis to your flashcard reviews. If you’ve ever wondered about the patterns hidden in your study data, this addon is for you.

Why Another Stats Addon?

There are several statistics addons for Anki already – Review Heatmap, More Overview Stats, True Retention. They’re great for basic numbers. But none of them answer questions like:

- Is my retention trending up or down over time?

- What’s my weekly study pattern? Do I study more on weekends?

- Which days were unusually productive (or lazy)?

- How are my card intervals growing over months?

ankiR Stats answers all of these using the same statistical techniques data scientists use.

Features

📊 Time Series Charts

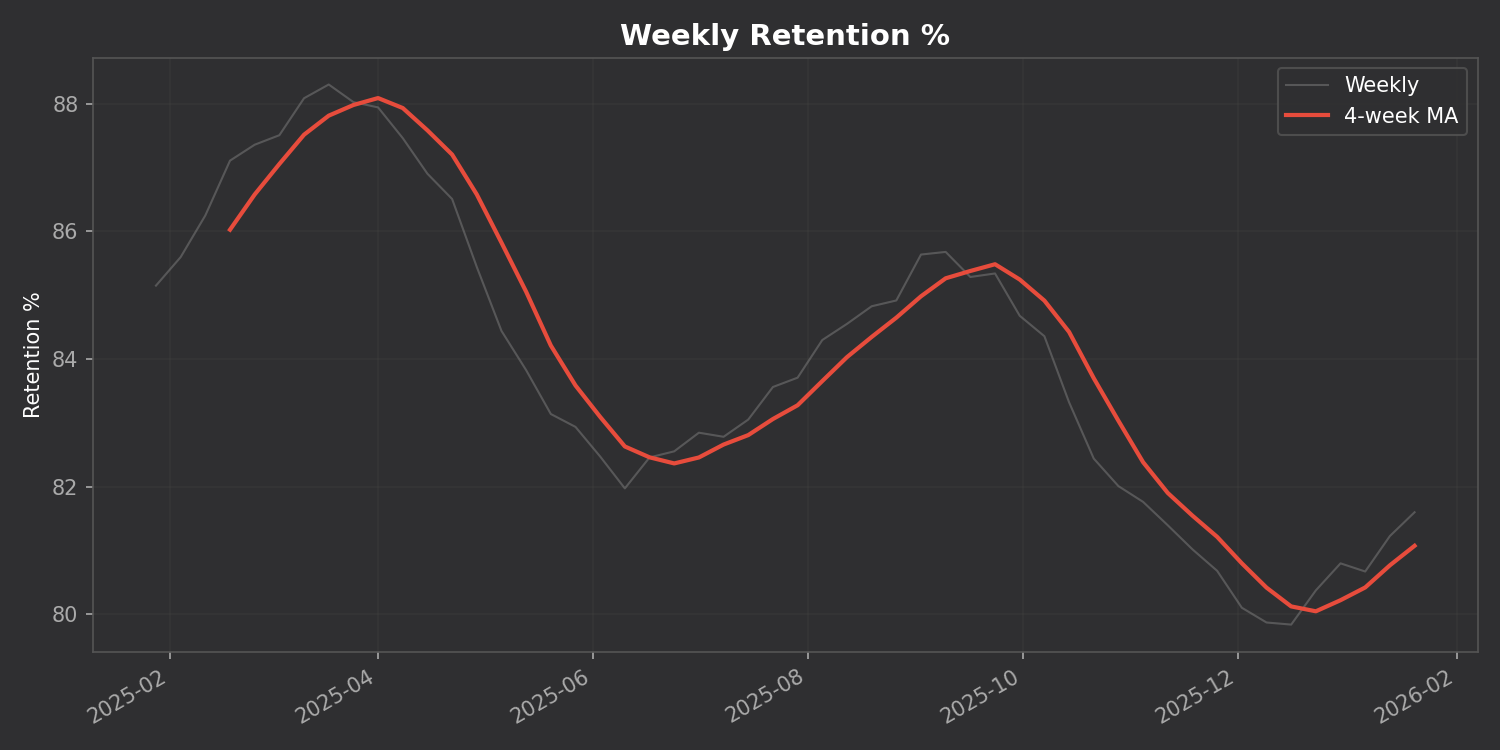

Track your retention, reviews, and intervals over time with a 4-week moving average to smooth out the noise:

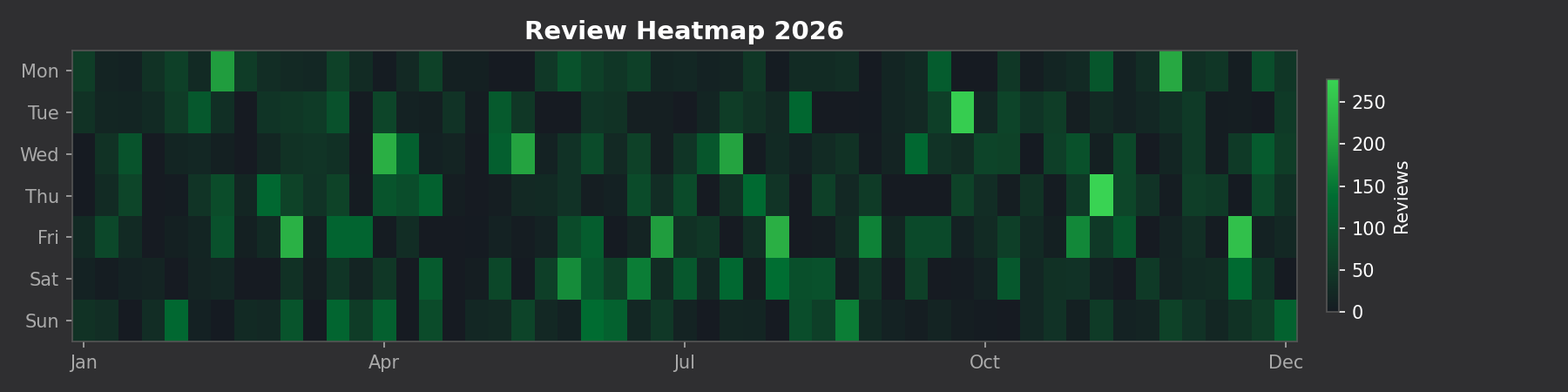
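For readers curious how that smoothing works, here is a minimal sketch of a 4-week (28-day) moving average in Python. It shows the general technique, not the addon's actual code: `moving_average` is a hypothetical helper, and using a trailing window is an assumption.

```python
def moving_average(values, window=28):
    """Trailing moving average over the last `window` points.

    The first window-1 results average over however many points exist so far.
    """
    out = []
    running = 0.0
    for i, value in enumerate(values):
        running += value
        if i >= window:
            running -= values[i - window]  # drop the point leaving the window
        out.append(running / min(i + 1, window))
    return out
```

Each output point is the mean of up to the previous 28 daily values, which is what flattens day-to-day spikes into a readable trend line.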

🗓️ GitHub-style Heatmap

See your entire year of reviews at a glance:

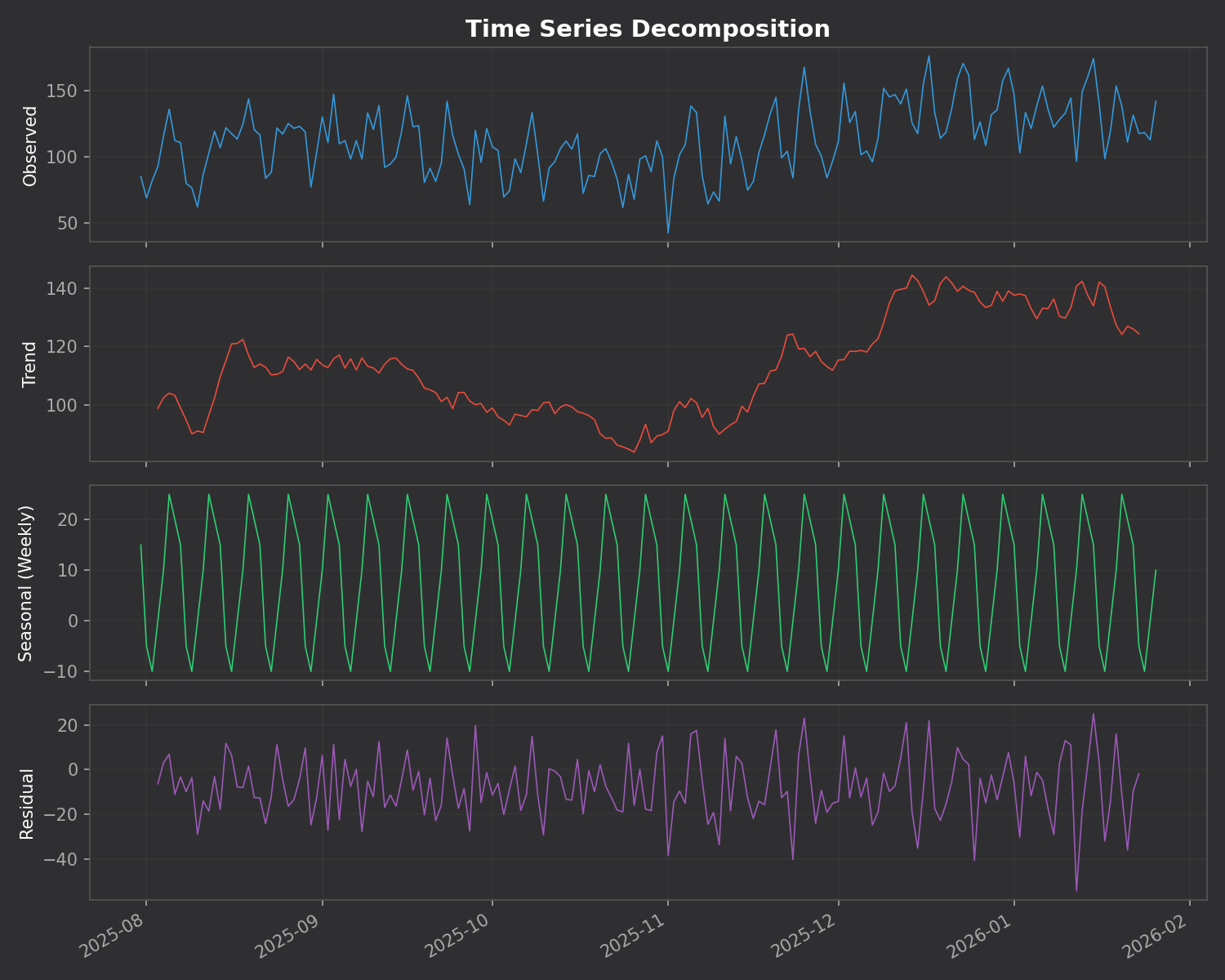

🔬 Time Series Decomposition

This is the killer feature. The addon breaks down your daily reviews into three components:

- Trend – Are you studying more or less over time?

- Seasonal – Your weekly pattern (which days you study most)

- Residual – Random variation that doesn’t fit the pattern
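The three components above can be sketched with a classical additive decomposition. This Python version is an illustration under stated assumptions, not the addon's code: `decompose_weekly` is a hypothetical helper, the trend is a centered 7-day moving average, and the weekly pattern is the average detrended value for each position in the week.

```python
def decompose_weekly(values, period=7):
    """Split a daily series into trend, seasonal and residual parts.

    Additive model: value = trend + seasonal + residual.
    `period` must be odd so the centered window is symmetric.
    """
    n = len(values)
    half = period // 2

    # Trend: centered moving average; undefined (None) near both ends.
    trend = [None] * n
    for i in range(half, n - half):
        trend[i] = sum(values[i - half:i + half + 1]) / period

    # Seasonal: mean detrended value for each position within the period.
    # Position 0 is the first day of the series, not necessarily Monday.
    buckets = [[] for _ in range(period)]
    for i, t in enumerate(trend):
        if t is not None:
            buckets[i % period].append(values[i] - t)
    means = [sum(b) / len(b) if b else 0.0 for b in buckets]
    offset = sum(means) / period  # centre the pattern around zero
    seasonal = [means[i % period] - offset for i in range(n)]

    # Residual: whatever the trend and weekly pattern do not explain.
    residual = [
        None if t is None else values[i] - t - seasonal[i]
        for i, t in enumerate(trend)
    ]
    return trend, seasonal, residual
```

On a series that repeats the same seven values every week, the trend comes out flat, the seasonal part reproduces the weekly shape, and the residual is zero.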

⚠️ Anomaly Detection

The addon automatically finds unusual study days using z-score analysis. Days where you studied way more (or less) than normal are flagged with their statistical significance.
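Z-score detection itself is compact enough to show in full. The sketch below uses only the Python standard library and illustrates the technique rather than the addon's implementation. `find_anomalies` is a hypothetical helper, and the threshold of 2.0 is an assumption.

```python
from statistics import mean, pstdev


def find_anomalies(daily_counts, threshold=2.0):
    """Return (day_index, z_score) for days far from the average review count."""
    mu = mean(daily_counts)
    sigma = pstdev(daily_counts)  # population standard deviation
    if sigma == 0:
        return []  # every day is identical, so nothing stands out

    anomalies = []
    for i, count in enumerate(daily_counts):
        z = (count - mu) / sigma
        if abs(z) >= threshold:
            anomalies.append((i, z))
    return anomalies
```

A z-score of 3 means the day sat three standard deviations above the mean. Positive scores mark unusually heavy days and negative scores unusually light ones.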

No Dependencies

Unlike many addons that require you to install Python packages, ankiR Stats uses web-based charts (Chart.js). It works out of the box on Windows, Mac, and Linux.

Installation

- Open Anki

- Go to Tools → Add-ons → Get Add-ons

- Enter code: `419954163`

- Restart Anki

- Access via Tools → ankiR Stats

Based on ankiR

This addon is a Python port of key features from ankiR, an R package I developed for comprehensive Anki analytics. The R package has 91 functions including forecasting, autocorrelation analysis, and FSRS integration – if you want even deeper analysis, check it out.

Open Source

The addon is open source and available on GitHub. Issues and contributions welcome!

Links

Let me know what you think in the comments!

-

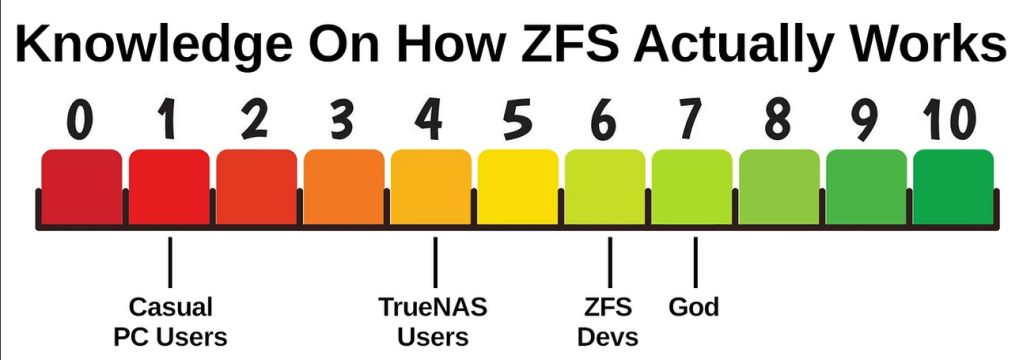

As someone who uses Anki extensively for medical studies, I’ve always been fascinated by the algorithms that power spaced repetition. When the FSRS (Free Spaced Repetition Scheduler) algorithm emerged as a more accurate alternative to Anki’s traditional SM-2, I wanted to bring its power to the R ecosystem for research and analysis.

The result is rfsrs — R bindings for the fsrs-rs Rust library, now available on r-universe.

Install it now:

    install.packages("rfsrs", repos = "https://chrislongros.r-universe.dev")

What is FSRS?

FSRS is a modern spaced repetition algorithm developed by Jarrett Ye that models memory more accurately than traditional algorithms. It’s based on the DSR (Difficulty, Stability, Retrievability) model of memory:

- Stability — How long a memory will last (in days) before dropping to 90% retrievability

- Difficulty — How hard the material is to learn (affects stability growth)

- Retrievability — The probability of recalling the memory at any given time

FSRS-6, the latest version, uses 21 optimizable parameters that can be trained on your personal review history to predict optimal review intervals with remarkable accuracy.
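To make the link between stability and retrievability concrete, here is a small Python sketch of the power-law forgetting curve used by earlier FSRS versions (4.5 and 5), where the decay exponent is fixed at -0.5. This is an assumption made for illustration: FSRS-6 makes the decay a trainable parameter, so values computed by rfsrs may differ, and `retrievability` below is a standalone helper, not the package function.

```python
DECAY = -0.5                      # fixed exponent in FSRS-4.5 / FSRS-5
FACTOR = 0.9 ** (1 / DECAY) - 1   # equals 19/81, chosen so R(S, S) = 0.9


def retrievability(stability, elapsed_days):
    """Probability of recall after elapsed_days for a given stability (days)."""
    return (1 + FACTOR * elapsed_days / stability) ** DECAY
```

The factor is chosen so that recall probability is exactly 90% when the elapsed time equals the stability, which matches the definition of stability given above.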

The Rating Scale

FSRS uses a simple 4-point rating scale after each review:

| Rating | Name | Meaning |
| --- | --- | --- |
| 1 | Again | Blackout |
| 2 | Hard | Struggled |
| 3 | Good | Correct |
| 4 | Easy | Effortless |

Why Rust + R?

The reference implementation of FSRS is written in Rust (fsrs-rs), which provides excellent performance and memory safety. Rather than rewriting the algorithm in R, I used rextendr to create native R bindings to the Rust library.

This approach offers several advantages:

- Performance — Native Rust speed for computationally intensive operations

- Correctness — Uses the official, well-tested implementation

- Maintainability — Updates to fsrs-rs can be easily incorporated

- Type Safety — Rust’s compiler catches errors at build time

Architecture

Here’s how rfsrs connects R to the Rust library:

rfsrs Architecture

- R Layer: `fsrs_default_parameters()`, `fsrs_initial_state()`, `fsrs_next_state()`, `fsrs_retrievability()`

- rextendr Bridge: R → Rust (vectors → `Vec`, lists → structs); Rust → R (`f64` → numeric, structs → lists)

- Rust Layer (fsrs-rs): Algorithm (FSRS-6, 21 params); MemoryState (stability, difficulty); Functions (`next_states()`, etc.)

Usage Examples

Getting Started

    library(rfsrs)

    # Get the 21 default FSRS-6 parameters
    params <- fsrs_default_parameters()

    # Create initial memory state (rating: Good)
    state <- fsrs_initial_state(rating = 3)
    # $stability: 2.3065
    # $difficulty: 2.118104

Tracking Memory Decay

    # How well will you remember?
    for (days in c(1, 7, 30, 90)) {
      r <- fsrs_retrievability(state$stability, days)
      cat(sprintf("Day %2d: %.1f%%\n", days, r * 100))
    }
    # Day  1: 95.3%
    # Day  7: 76.4%
    # Day 30: 49.7%
    # Day 90: 26.5%

Note: Stability of 2.3 days means memory drops to 90% retrievability after 2.3 days. This increases with each successful review.

Use Cases for R

- Research — Analyze spaced repetition data with R’s statistical tools

- Visualization — Plot memory decay curves with ggplot2

- Integration with ankiR — Combine with ankiR to analyze your Anki collection

- Custom schedulers — Build spaced repetition apps in R/Shiny

Building Rust + R Packages

The rextendr workflow:

- Create package with `usethis::create_package()`

- Run `rextendr::use_extendr()`

- Write Rust with `#[extendr]` macros

- Run `rextendr::document()`

- Build and check

Windows builds: Cross-compiling Rust for Windows can be tricky. My r-universe builds work on Linux and macOS but fail on Windows. Windows users can install from source with Rust installed.

Resources

- rfsrs on r-universe

- rfsrs on GitHub

- fsrs-rs — The Rust library

- ABC of FSRS — Algorithm intro

- rextendr — R + Rust bindings

What’s Next

Future plans include parameter optimization (training on your review history), batch processing, and tighter ankiR integration.

If you’re interested in spaced repetition or memory research, give rfsrs a try. Feedback welcome!

-

What’s Changed

- Helm Chart
  - chart: Set admin metrics port to http port by @sheyabernstein in #7936
  - fix: Invalid volume mount conditional in filer template by @nichobi in #7992

- S3 API
  - Fix S3 list objects marker adjustment for delimiters by @chrislusf in #7938
  - fix: directory incorrectly listed as object in S3 ListObjects by @chrislusf in #7939
  - Refine Bucket Size Metrics: Logical and Physical Size by @chrislusf in #7943
  - Fix AWS SDK Signature V4 with STS credentials (issue #7941) by @chrislusf in #7944
  - fix: correcting S3 nil cipher dereference in filer init by @tjasko in #7952
  - Support AWS standard IAM role ARN formats (issue #7946) by @chrislusf in #7948
  - s3api: fix authentication bypass and potential SIGSEGV (Issue #7912) by @chrislusf in #7954
  - store S3 storage class in extended atrributes #7961 by @ravenschade in #7962
  - fix: handle range requests on empty objects (size=0) by @chrislusf in #7963
  - Fix trust policy wildcard principal handling by @chrislusf in #7970
  - Support Policy Attachment for Object Store Users by @chrislusf in #7981
  - Fix STS identity authorization by populating PolicyNames (#7985) by @chrislusf in #7986
  - Fix: ListObjectVersions delimiter support by @chrislusf in #7987
  - Fix STS authorization in streaming/chunked uploads by @chrislusf in #7988
  - fix(s3api): ensure S3 configuration persistence and refactor authorization tests by @chrislusf in #7989

- Misc
  - Standardize -ip.bind flags to default to empty and fall back to -ip by @chrislusf in #7945
  - Fix unaligned 64-bit atomic operation on ARM32 (#7958) by @aimmac23 in #7959
  - Fix flaky EC integration tests by collecting server logs on failure by @chrislusf in #7969
  - test: fix EC integration test needle blob mismatch by @chrislusf in #7972
  - chore: execute goimports to format the code by @promalert in #7983

- Filer
  - fix(gcs): resolve credential conflict and improve backup logging by @chrislusf in #7951
  - Fix jwt error in Filer pod (k8s) by @MorezMartin in #7960
  - Fix chown Input/output error on large file sets by @chrislusf in #7996

- Admin
  - fix: EC UI template error when viewing shard details by @chrislusf in #7955
  - Fix special characters in admin-generated secret keys by @chrislusf in #7994

- FUSE Mount
  - Fix: prevent panic when swap file creation fails by @LeeXN in #7957
  - Enable writeback_cache and async_dio FUSE options by @chrislusf in #7980

- Mini
  - feat: add flags to disable WebDAV and Admin UI in weed mini by @chrislusf in #7971

- Volume Server
  - storage/needle: add bounds check for WriteNeedleBlob buffer by @chrislusf in #7973
  - opt: reduce ShardsInfo memory usage with bitmap and sorted slice by @chrislusf in #7974

- Helm Chart